Community IT Innovators Nonprofit Technology Topics

Community IT offers free webinars monthly to promote learning within our nonprofit technology community. Our podcast is appropriate for a varied level of technology expertise. Community IT is vendor-agnostic and our webinars cover a range of topics and discussions. Something on your mind you don’t see covered here? Contact us to suggest a topic! http://www.communityit.com


Nonprofit AI Framework pt 1


Ethics, AI Tools, and Policies. How is Your Philanthropy Using AI?

Community IT was thrilled to welcome two respected leaders, Sarah Di Troia from Project Evident and Jean Westrick from the Technology Association of Grantmakers, who shared their informed perspective on AI in philanthropy and walked our audience through this AI Framework. You will not want to miss this discussion.

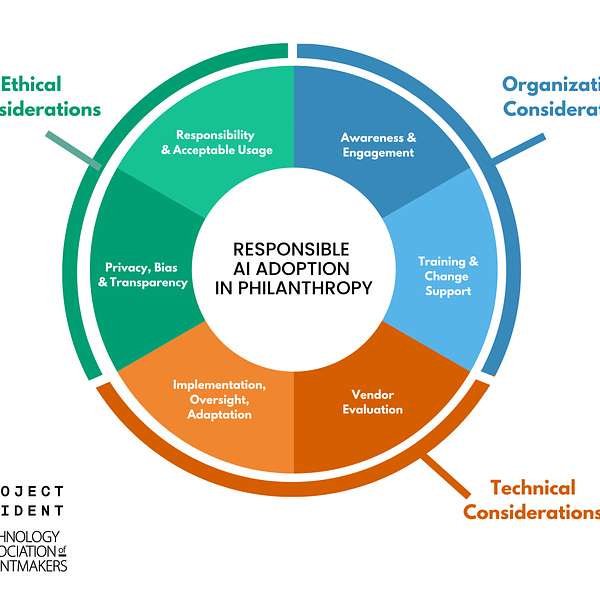

As society grapples with the increasing prevalence of AI tools, the “Responsible AI Adoption in Philanthropy” guide from Project Evident and the Technology Association of Grantmakers (TAG) provides pragmatic guidance and a holistic evaluation framework for grantmakers to adopt AI in alignment with their core values.

The framework emphasizes the responsibility of philanthropic organizations to ensure that the usage of AI enables human flourishing, minimizes risk, and maximizes benefit. The easy-to-follow framework includes considerations in three key areas – Organizational, Ethical, and Technical.

Sarah Di Troia from Project Evident and Jean Westrick from TAG walked us through the research and thinking behind the framework. At the end of the hour, they took part in a moderated Q&A discussion of the role of nonprofits in the evolution of AI tools, and how your nonprofit can use the Framework to guide your own implementation and thinking about AI at your organization.

As with all our webinars, this presentation is appropriate for an audience of varied IT experience.

Community IT is proudly vendor-agnostic and our webinars cover a range of topics and discussions. Webinars are never a sales pitch, always a way to share our knowledge with our community.

We hope that you can use this Nonprofit AI Framework at your organization.

_______________________________

Start a conversation :)

- Register to attend a webinar in real time, and find all past transcripts at https://communityit.com/webinars/

- email Carolyn at cwoodard@communityit.com

- on LinkedIn

Thanks for listening.

Hello, and welcome to the Community IT Innovators Technology Topics Podcast, where we discuss nonprofit technology, cybersecurity, tech project implementation, strategic planning, and nonprofit IT careers. Find us at communityit.com.

Thank you for joining this Community IT Podcast, Part 2. You can find Part 1 in your podcast feed if you subscribe wherever you listen to podcasts.

Thank you all for joining us at the Community IT Innovators webinar. Today, we're going to walk through a new AI framework, put together through the research of Project Evident and the Technology Association of Grantmakers. My name is Carolyn Woodard.

I'm the Outreach Director for Community IT and the moderator today.

Hello and welcome everyone. I am Jean Westrick. I am the newly appointed Executive Director for the Technology Association of Grantmakers.

Welcome everyone. It's a pleasure to be here in dialogue with all of you about AI. I'm Sarah Di Troia. I'm a Senior Advisor in Product Innovation for Project Evident. We've already spent a bit of time talking about some of the examples when we think about individual, organizational, or mission attainment uses. So I'm going to move through these relatively quickly.

How are Nonprofits Using AI Tools?

Individual Use

Sarah Di Troia: Individual use. This is where your organization has the least amount of control. ChatGPT is available. You don’t have control over whether or not your potential grantees are using ChatGPT or Grantable. You also don’t have control over whether your employees are using those tools. Otter.ai and other transcription services were a big thing that came up when we were holding that large 300-person conversation around the framework. We asked people how AI was coming into their world, and two things came up quite a bit: transcription services, and the ability to use generative AI to generate copy.

Organization-wide Use

When we move to organizational efficiency, again it’s those transcription and summary services, for example in a Zoom account. That is unlike Fireflies or Otter, which somebody can just use individually. These are tools that you’re provisioning, that your technology team has paid for and has turned on.

I think this is an interesting place, because right now, you have a little more control over this than you will in the future. These are new features that are likely available to you through some of your technology providers that are in your tech stack. And increasingly, there’ll be standard features that are in your tech stack that you cannot turn on or turn off.

Right now in Zoom, you can turn it on and off. AI features in grants management software are upsells if you’re in Fluxx or Submittable. But I would expect that in the future, AI will just be a core part of the software. Deploying Microsoft Copilot was just raised recently. Right now, that’s a pretty significant upcharge; I think it’s something like a hundred, a hundred plus per seat right now. But I would imagine that price will come down as others offer similar products. And maybe eventually it’ll simply be a part of the core product, and there’ll be something else new and shiny that is an additional charge.

Mission Attainment Use

Finally, if we look at mission attainment, this is about how you support grant-making decisions or find bias in grant-making.

That was one of the big things that folks were interested in: how do I get better at choosing where to direct my funding, or understand where bias is already happening in my grant-making selections?

Enabling measurement, learning and evaluation (MEL), how do I do that much more broadly across my organization and have it really at the fingertips of folks who are making decisions? Can I use it to analyze a theory of change and to understand gaps and errors?

Landscape analysis. I want to fund in a new area, or even an area I know well; can this technology help me understand the landscape?

Universal internal search on prior grants and reports. I did mention earlier that I’d talk a little bit about what I’ve seen at some foundations, and the Emerson Collective has basically gotten rid of their intranet. The internal way that employees search for information about their company, their benefits, et cetera, is now all handled by generative AI. That’s Phase 1. In Phase 2, they’re moving all the grants and the grantee reports into that system. They see it as a way to summarize the foundation’s past knowledge very quickly, so that folks can access it immediately and be well equipped to be in dialogue with the community, which is really where they want their program officers to be.

Jean Westrick: That’s a great overview of the ways in which AI is being used. So, fear not if you’re feeling a bit FOMO, it’s fine to be where you’re at right now. We’re all in a space of discovery and learning, and trying to figure out what is the best way forward. And that’s why I’m excited to share this framework. Because I think that this is a great place to start.

Nonprofit/Philanthropy AI Framework

So, regardless of your usage, whether it’s individual, organizational, or mission attainment, or you’re thinking about funding nonprofit enablement, responsible AI adoption needs to address some important considerations as part of the decision-making process and the journey.

The framework has three buckets of considerations and we’ll go into deeper detail on each of those.

- Ethical

- Organizational

- Technical

And I will say that these sorts of considerations cannot be handled in isolation or in a vacuum. They’re an opportunity for members across your organization to come together and address these considerations within your organizational context.

And at the heart of each of these is doing it responsibly. To do it ethically is to center the needs of people and think about these considerations in alignment with your organizational values.

I cannot overstate this. We are on an AI journey, and we’re at the very, very beginning of that journey. This is going to be a process. This is not a one-and-done, where you’ve tested it, you’ve deployed it, and it’s over. These things will get better with time, and your needs will also evolve with time.

So, as you think about this, everything should be human-centered. Listen to your key stakeholders throughout these considerations, and make sure that you have diverse voices at the table, so you’re considering the implications within your context. Think about iterating on a pilot that can bring those various stakeholders in and gather their feedback.

The colors on this framework were selected to ensure that the maximum number of people could see it. Recognizing that almost 5% of the population is colorblind, we selected this color palette to make sure that our technology and our tools meet the needs of the most people possible. Accessibility is one lens through which you can think about centering humans.

Organizational Considerations: Your People

Sarah Di Troia: Great. I’ll walk through the elements of how you think about applying the framework. There’s more detail in the document that we’ve linked to really help you get started, but as Jean was saying, everything should be human-centered.

And so we’d like you to consider:

- Finding early adopters. We have some questions about change management. Adopting AI is no different than any other type of significant change that you are managing throughout your organization, and we’ve all certainly had to manage a lot of change over the last five years. Think about who your early adopters are: do you already have a group of folks who are always on the cutting edge of technology? How do you bring them in to be the champions of experimenting with AI and then talking about AI with their colleagues?

- Creating a strong two-way learning process. This is not something that your technology team is going to do off in a dark closet by themselves. They really have to be in strong two-way engagement with the staff.

- Incorporating staff and, where reasonable, grantees in tool selection. One of the organizations I didn’t talk about was a foster care youth organization that was using precision analytics, and it had foster youth on its design committee. So, really, think about human-centered design. Do you have edge users, the folks who are likely most impacted by the system? How do you make them part of designing the system?

- Internal discussions to inform policy and practice. You likely already have a data governance policy and a privacy policy inside your organization. Pulling those out, dusting them off, seeing whether or not they go far enough for you based on where AI is today. They were probably written in a pre-AI time or not considering AI. And how are you circulating and engaging your team on those policies?

We encourage people to think about data governance and data privacy like diversity, equity, inclusion and belonging work. That is not a one-and-done activity inside your organization, and privacy and data governance shouldn’t be one-and-done either. You should consider them an always-on education and reinforcement opportunity.

- Assessing how AI will evolve or sit within your mission, and then building internal communications. Again, as Jean was saying, we’re on a journey; it’s not going to be static. So think about the internal education and communication you want to use to make sure that everyone stays with you on this journey around ethical considerations.

And interestingly, when we talk to people about where to start, you’ll notice that this is a circle graphic; you can start anywhere.

We think you should start either with engaging people or with the ethical considerations. Those are equally important starting points.

Ethical Considerations: Your Mission

We really encourage people not to try to prohibit AI. AI is like the internet. It is not a winning strategy to yell at the ocean and tell the tide to stop rising. That is not going to be a win; it might buy you a little bit of time. But as we already said, there’s AI use happening outside of your control. It’s better to engage with it from the start.

Be mindful about how data should be collected and stored. Think about how your data is being collected, and about the fact that you likely have access to, and are storing, lots of data from communities, because that’s the data coming to you through grantees. Be really mindful about how that data is being stored and how it’s being used inside your organization.

Adapt that data governance policy, if it needs to be adapted.

Setting policy for notifying people when AI is being used. When am I talking to a human? When am I talking to a bot? Is my grant being reviewed by a bot before it gets to a human? People need to understand how AI is being used.

Creating the ability for folks to opt out.

Ensuring human accuracy checks. I mean, we don’t have enough time in this webinar to go through the myriad ways it has been shown that technology in general, and AI in particular, can fail communities of color and marginalized communities. So make sure that human accuracy checks are built into however you are using AI, and enable people to request a manual review and to have the AI-generated output shared back with them.

These are all considerations that were shared by our colleagues and prioritized by them as ways you can think about privacy, bias, and transparency (and how to guard against bias), as well as responsibility and acceptable usage.

Technical Considerations: AI Tools

Jean Westrick: Technical considerations are really the last conversation that you should be having. I know I’m saying this as someone who is representing the technology space within philanthropy, but a lot of these challenges are not technical challenges per se. They are also human challenges. And that is the hardest part and the place where you should start.

When you think about the technical considerations, you want to make sure you understand the platform you’re using, and what kinds of data privacy, security, and biases are built into the model. You also want to understand what risks are in play. That is really important. I know that there’s a question up here about the Emerson Collective example that Sarah used. ChatGPT uses a large language model (LLM) that was trained on a lot of data scraped from many public sources.

The Emerson Collective is using a closed large language model: a private LLM that works with your data only. You’re going to be able to control your data much better than if you put sensitive data on a third-party platform where it can end up in the model’s training data.

With regard to other technical considerations, you’re going to want to work with a diverse group of folks who can help identify the low-hanging fruit where you can adopt AI. Tasks that are ready for automation and are really transactional are a good place to start, as opposed to something that is core to your mission. That’s a good place to experiment, understand where the edges are, and increase tech fluency among your staff.

With all of these tools, there is a learning curve. So you’re going to have to practice, and you’re going to have to allow people to incorporate change in a natural way.

And then you want to make sure that there’s some oversight and ways in which you can evaluate that.

Just to underscore, Sarah recommended starting at the top of that circle and being able to have a conversation about what your AI vision is and centering people in that conversation, exploring those ethical considerations before you move on to tools and platforms and those sorts of things.

Carolyn Woodard: I think the good news is we are at the end and now is really the opportunity to be able to engage with questions that have been coming in and this phenomenal chat stream that’s been happening this whole time.

Jean Westrick: Yes. We recognize that this framework is really just a starting point. And then I want to underscore that you can find more information on tagtech.org/ai.

We strongly believe that our sector has a duty to balance the potential of AI with its risks and to make sure that we adopt it in alignment with our values. That is key. Our commitment to the work that we do remains centered on our missions for a more just, equitable, and peaceful world. The genie is out of the bottle on AI; it’s here.

So, it’s our opportunity to embrace this with an informed optimism to make sure that AI adoption is human-centered, prevents harm, and mitigates those risks.

We should do it in a way that is iterative and that pays attention to the change journey that everyone’s on.

And then I think the most important question to ask yourself is when AI isn’t the answer. Just because you buy a hammer doesn’t mean everything is a nail. So you really have to ask yourself: is this the best way to solve this challenge? Because it may not be.

Q and A

Carolyn Woodard: We do have a couple of questions that came in that I thought were interesting.

What kind of organizational policies do you recommend, and also do you recommend having an organizational AI policy before implementing this AI framework?

Jean Westrick: Great questions. I think certainly an AI usage policy is something organizations need to take up soon. And it’s okay to draft that before you have implemented it. You may need to evolve it and revisit it as you go down the journey.

Sarah also mentioned your data governance framework and your data governance policy. With both of these things, writing the policy is not enough. You have to think about how to create that policy, create it collaboratively, and then how you are going to roll it out and socialize it, so people have the training and the knowledge to follow the policy.

Carolyn Woodard: You may already have data policies, and even if they haven’t been revised in the last year or longer, they give you a starting place. If you ask around in your organization, you may find you don’t have to start from scratch on your data policy. You may have something in place that you can add these AI considerations to.

Community IT has an acceptable use policy template, a place to start from, that’s available free on our site.

That doesn’t address all of these other policies, like who has access to your data and to different departments’ data. If you’re going to start using AI to query your documents, are there some HR documents in there that aren’t supposed to be in there? You’re going to want to have policies in place for that.

How do you introduce AI to staff that might be afraid of it or have no idea where to start?

Sarah Di Troia: Don’t look at AI differently from the education and evolution you wanted to have in your organization around diversity, equity, inclusion and belonging.

Think about the comprehensive plan that you put in place, the time you gave the folks you felt like could be examples of where you wanted your culture to be, of what you wanted your norms to be, and how you involved them in the development and the championing of those changes. That has nothing to do with title, by the way. That has to do with who’s a culture carrier inside of your organization.

There are lots of different models out there around change management. But you’ve all been leading change pretty dramatically for the last five years, if not before that.

And so I encourage you to think about those early adopters. I encourage you not to think about title, and instead to think about who holds informal authority as well as formal authority in your organization. Think about the ways you can get people comfortable with AI through examples. People might not realize that Netflix uses a recommendation engine, but most people have used Netflix, and most people have been on Amazon’s website and had it offer them other products.

When we talk about AI, it’s not entirely foreign to folks. But it’s helping do the translation of what could a recommendation engine like Netflix mean for us inside of the problems that we’re trying to solve? And as Jean said, you do not want to be the hammer assuming that everything’s a nail.

Look at the persistent barriers or challenges we face in achieving our mission and then really understand, well, is AI something that could help us with some of these barriers? Or frankly, is there something else we could do that would be far less expensive?

A fairly easy example: there are lots of tools available that use AI to support grantees in writing grant applications. Grantable is one of them. More and more grants management systems are providing an additional AI tool to help you summarize grants, process grants, et cetera. If the problem you were trying to solve is that the grant process is too onerous, and the transaction costs are too high for both grantees and program officers, putting AI on both sides of that process is a really expensive way to solve that problem. If the problem was that the grant process was too onerous, you could change the process. You didn’t have to bring AI in on both sides of that problem.

So, I really encourage people to be thoughtful about it. There could be less expensive, less invasive, easier to design solutions to your challenges. Or it could be that AI would be a tremendous opportunity, but have it be a problem-focused conversation, not a technology-focused conversation. Technology’s the means to the end.

Carolyn Woodard: I love that framing of it. I think we hit these learning objectives and I hope you feel that we did too:

- Learning about AI and generative AI. Thank you so much for walking us through those differences and then giving us these amazing use cases and examples. I think that’s really helpful to pull it all together.

- The framework design principles and the ways AI is being used in philanthropy and nonprofits.

- And that amazing graphic that helps you think through those three areas, the elements within each of them, and where to start when using AI. That framework is available for download on the site https://www.tagtech.org/page/AI.

Thank you, Jean and Sarah for taking us through all of this and have a wonderful rest of your day.